EgoVision and Human Augmentation for Cultural Heritage

Augmented Reality and Augmented Humanity offer the opportunity to further customize the museum experience, for example through new kinds of self-guided tours or real-time translation of interpretive material. By the end of this year, several companies (such as Google and Vuzix) will release wearable computers with a head-mounted display. We would like to investigate the use of these devices for Cultural Heritage applications.

Augmented reality (AR) is a real-time, direct or indirect view of a physical, real-world environment that has been enhanced (augmented) with virtual, computer-generated information. AR aims to simplify the user's life by bringing virtual information not only to their immediate surroundings but also to any indirect view of the real-world environment, such as a live video stream. AR enhances the user's perception of, and interaction with, the real world. Whereas Virtual Reality (VR) technology, or the Virtual Environment as Milgram called it, completely immerses users in a synthetic world in which the real world is not visible, AR technology augments the sense of reality by superimposing virtual objects and cues upon the real world in real time. For this reason, augmented reality is likely to play an important role in the future of education and cultural heritage, offering learners and visitors a more effective way to access content.
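In the indirect (video see-through) case, the final step of superimposing virtual content on a live frame can be illustrated with per-pixel alpha compositing. The following is a minimal NumPy sketch under stated assumptions: the function name `superimpose`, the per-pixel `alpha` mask and the `top`/`left` placement parameters are hypothetical, and a real AR system would also need tracking and registration to decide where the overlay belongs, which is not shown here.

```python
import numpy as np


def superimpose(frame, overlay, alpha, top, left):
    """Hypothetical helper: alpha-blend virtual content onto a copy of a camera frame.

    frame:     H x W x 3 uint8 image (the real-world view)
    overlay:   h x w x 3 image holding the virtual content
    alpha:     h x w float mask in [0, 1] (0 = keep frame, 1 = show overlay)
    top, left: position of the overlay's upper-left corner in the frame
    """
    h, w = overlay.shape[:2]
    if top < 0 or left < 0 or top + h > frame.shape[0] or left + w > frame.shape[1]:
        raise ValueError("overlay must lie entirely inside the frame")
    out = frame.astype(np.float64)  # astype returns a copy, so frame is untouched
    region = out[top:top + h, left:left + w]  # a view into out
    a = alpha[..., None]  # broadcast the 2-D mask over the color channels
    region[...] = a * overlay + (1.0 - a) * region
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

For example, blending a 2 x 2 patch of value 200 with `alpha = 0.5` onto a black frame yields pixels of value 100 inside the patch and leaves the rest of the frame unchanged.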

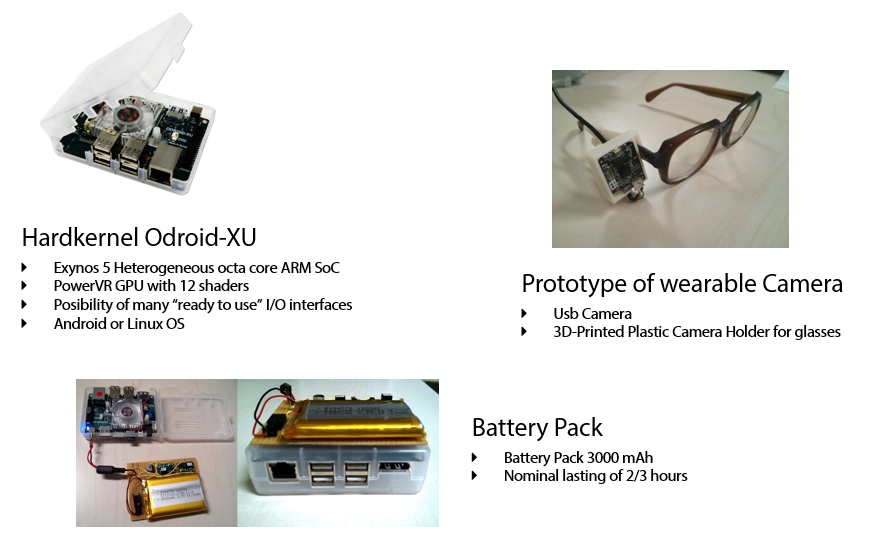

We are designing our first prototype for EgoVision and augmented humanity.

We are using the Odroid-XU, a development board made by Hardkernel. It embeds an octa-core ARM big.LITTLE SoC with a PowerVR GPU. The board is powered by a battery pack to make it portable, and we 3D-printed a glasses-mounted holder for a USB camera. The system is designed as a wearable development platform for easily capturing videos of everyday active life from the wearer's point of view. Moreover, it makes it possible to deploy vision algorithms on board and to interact with remote devices and displays.

To build detailed models of human interaction and object manipulation, it is important to detect hand regions and recognize gestures. Hand detection is a key component of tasks such as gesture recognition, hand tracking, action recognition, and understanding hand-object interactions. We designed a hand detection and gesture recognition algorithm that segments hands and recognizes static gestures.
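As a toy illustration of the two steps named above (segmenting the hand, then recognizing a static gesture), the sketch below uses a fixed YCbCr skin-color threshold followed by a nearest-neighbor match on a coarse grid descriptor of the hand mask. This is a deliberately simple stand-in, not the authors' algorithm: the threshold ranges (the classic Cb 77-127, Cr 133-173 heuristic), the 4 x 4 grid descriptor, the template format and all function names are assumptions chosen for illustration. In practice the templates would be descriptors computed from labeled example masks.

```python
import numpy as np


def rgb_to_ycbcr(img):
    """Convert an H x W x 3 uint8 RGB image to float YCbCr (ITU-R BT.601, full range)."""
    rgb = img.astype(np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return np.stack([y, cb, cr], axis=-1)


def skin_mask(img, cb_range=(77, 127), cr_range=(133, 173)):
    """Hypothetical hand segmentation: keep pixels whose chrominance lies in a fixed skin range."""
    ycbcr = rgb_to_ycbcr(img)
    cb, cr = ycbcr[..., 1], ycbcr[..., 2]
    return ((cb >= cb_range[0]) & (cb <= cb_range[1])
            & (cr >= cr_range[0]) & (cr <= cr_range[1]))


def mask_descriptor(mask, grid=(4, 4)):
    """Fraction of hand pixels in each cell of a coarse grid, flattened to a vector."""
    h, w = mask.shape
    rows, cols = grid
    feats = []
    for i in range(rows):
        for j in range(cols):
            cell = mask[i * h // rows:(i + 1) * h // rows, j * w // cols:(j + 1) * w // cols]
            feats.append(cell.mean() if cell.size else 0.0)
    return np.array(feats)


def classify_gesture(mask, templates):
    """Return the label of the (label, descriptor) template closest to the mask's descriptor."""
    d = mask_descriptor(mask)
    best_label, best_dist = None, np.inf
    for label, template in templates:
        dist = np.linalg.norm(d - template)  # Euclidean distance between descriptors
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label
```

Fixed color thresholds are sensitive to illumination changes and to skin-colored background objects, so this sketch should be read only as an illustration of the segment-then-classify structure.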

Publications

1. Baraldi, Lorenzo; Paci, Francesco; Serra, Giuseppe; Cucchiara, Rita. "Gesture Recognition using Wearable Vision Sensors to Enhance Visitors' Museum Experiences." IEEE Sensors Journal, vol. 15, pp. 2705-2714, 2015. DOI: 10.1109/JSEN.2015.2411994 (Journal)

2. Baraldi, Lorenzo; Paci, Francesco; Serra, Giuseppe; Benini, Luca; Cucchiara, Rita. "Gesture Recognition in Ego-Centric Videos using Dense Trajectories and Hand Segmentation." 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Columbus, Ohio, 23-28 June 2014, pp. 702-707. DOI: 10.1109/CVPRW.2014.107 (Conference)

3. Serra, Giuseppe; Camurri, Marco; Baraldi, Lorenzo; Benedetti, Michela; Cucchiara, Rita. "Hand Segmentation for Gesture Recognition in EGO-Vision." Proceedings of the 3rd ACM International Workshop on Interactive Multimedia on Mobile & Portable Devices, Barcelona, Spain, 21 October 2013, pp. 31-36. DOI: 10.1145/2505483.2505490 (Conference)